AlgoX2 Raises $3.5M Seed Round to Redefine Data Streaming for the AI Era

AlgoX2 has raised $3.5 million in seed funding, led by Bessemer Venture Partners, to build a new standard of fast, scalable, and durable data streaming infrastructure for an AI-native world.

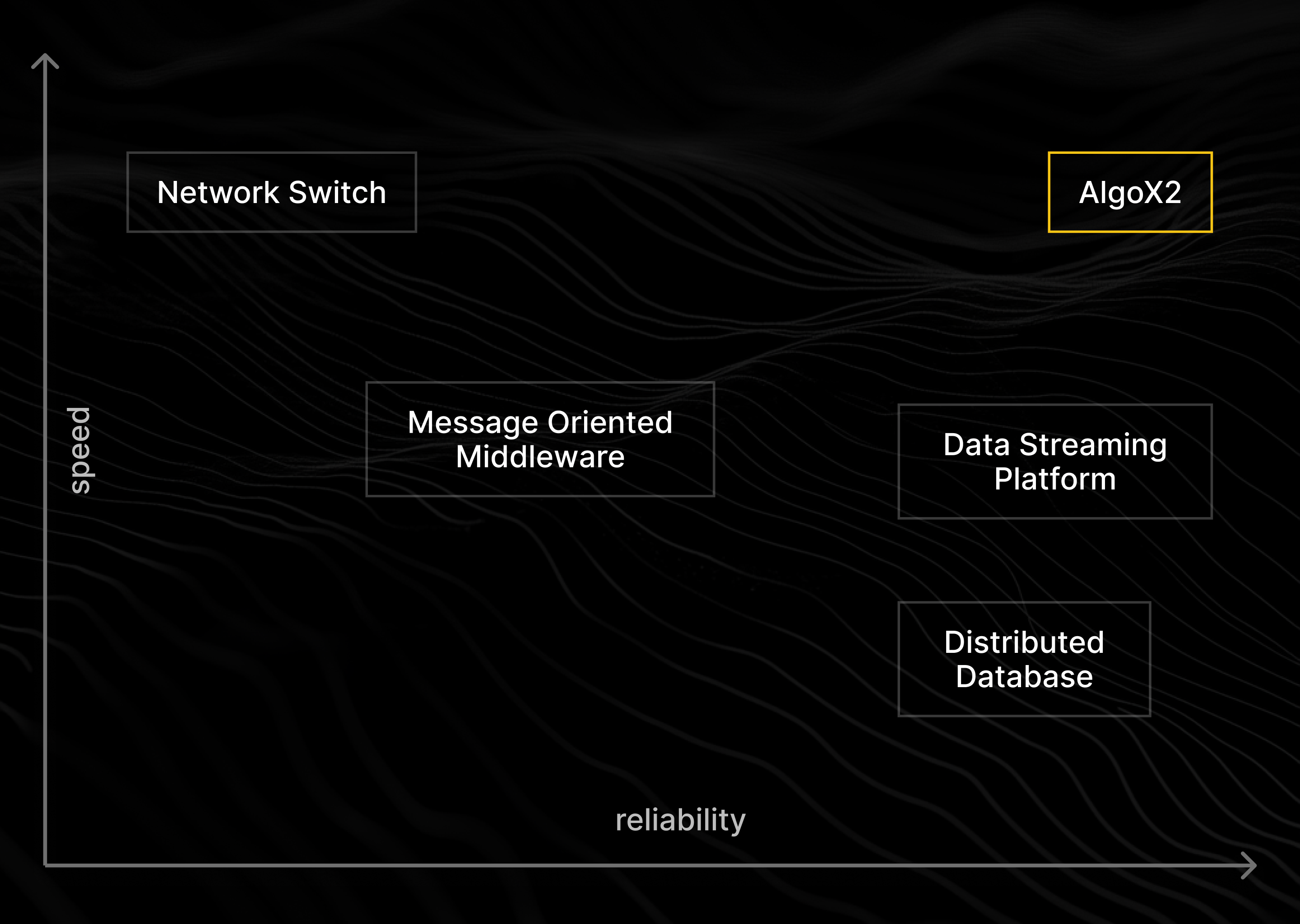

AlgoX2 is a clean-sheet streaming platform designed by the team behind the New York Stock Exchange's trading technology. It is compatible with the Kafka API and natively supports Redis, NATS, and MQTT, delivering up to 10X higher throughput and up to 90% lower total cost of ownership (TCO) than legacy stacks without compromising latency.

Why now

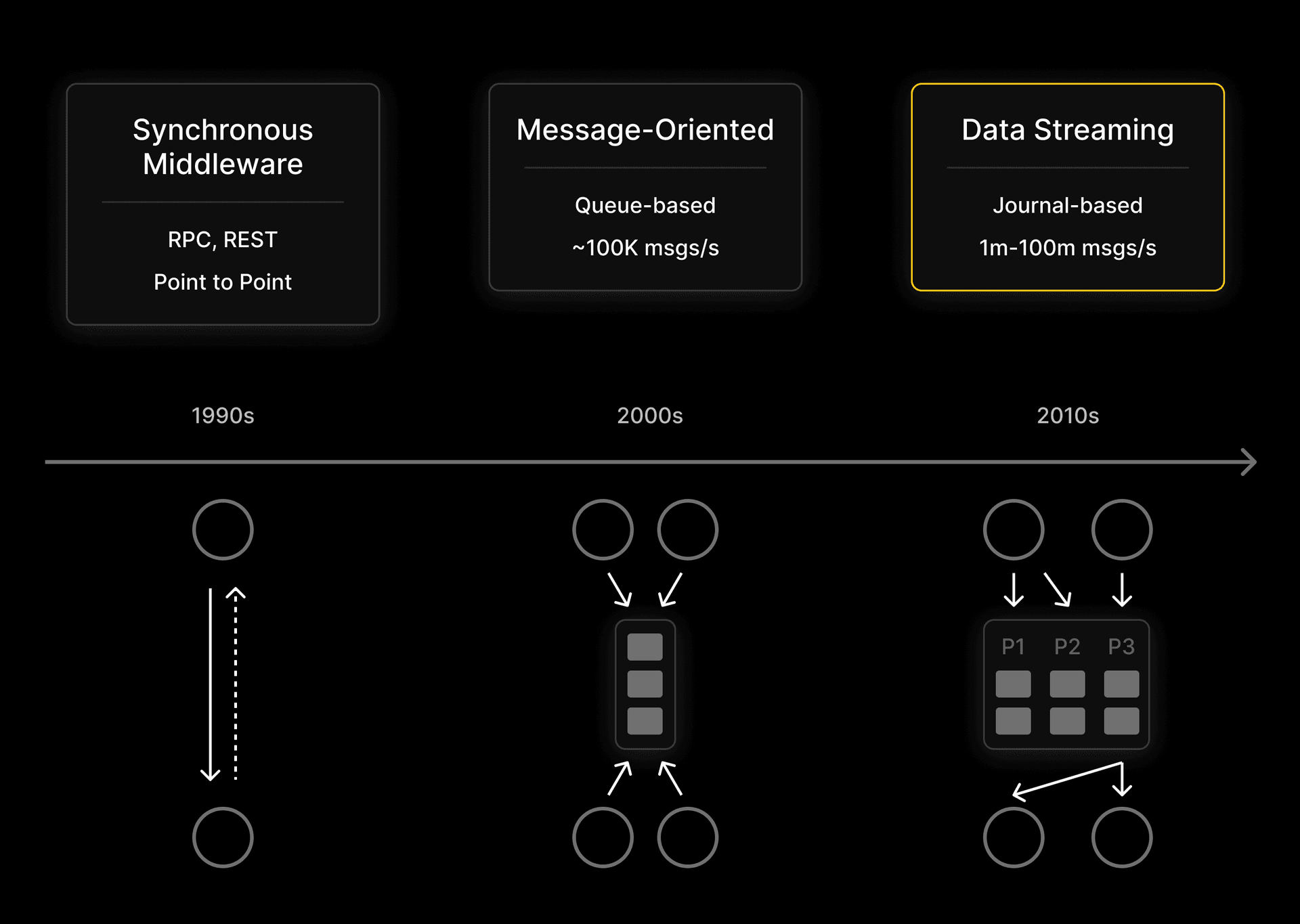

Enterprise data infrastructure enables new products and has to adapt to every major technological shift. Today it is undergoing a generational transition, driven by AI and by the move to interoperable lakehouses in the Data 3.0 era. A growing share of enterprise data is in motion, with real-time use cases spanning AI assistants, personalization, fraud detection, and observability.

Streaming has moved from a supporting tool to a foundation: the speed, quality, and governance of data flows now determine how well AI-native products perform. Kafka-era stacks shaped the last decade, but they were built for a different world. Under modern workloads, they struggle with throughput, cost, and latency. The market needs a new streaming backbone built for Data 3.0.

Bessemer's perspective

Bessemer is proud to back founders building foundational infrastructure for the next wave of real-time innovation. In sectors like AI and finance, where speed is critical, AlgoX2 gives organizations a purpose-built streaming backbone that helps them move fast, scale with confidence, and deliver dependable performance for tomorrow's data-driven world. In a future that runs on real-time experiences, AlgoX2's founders Alexei, Vlad, and George are redefining what streaming should be: faster, leaner, and truly built for what's next.

Founders' perspective

Data streaming platforms transform fragmented data into the reliable flows that distributed applications depend on. We see a deep need for a solution that scales effortlessly, without endless tuning. Legacy streaming tools are built like straws; AlgoX2 is built like a firehose. It's engineered to handle the exponential data growth of generative AI, embrace existing protocols, and cut TCO by up to 10X, radically simplifying streaming for every enterprise.

Hardware has raced ahead with SSDs, NVMe, 100 GbE, and GPU-scale AI, but data software hasn't kept up. To fully use modern networks, storage, and CPUs, streaming needs a new architecture. AlgoX2 is that architecture, built for real-time efficiency and scale. Whatever the deployment, we maximize performance on off-the-shelf hardware and in Bring Your Own Cloud (BYOC) environments.

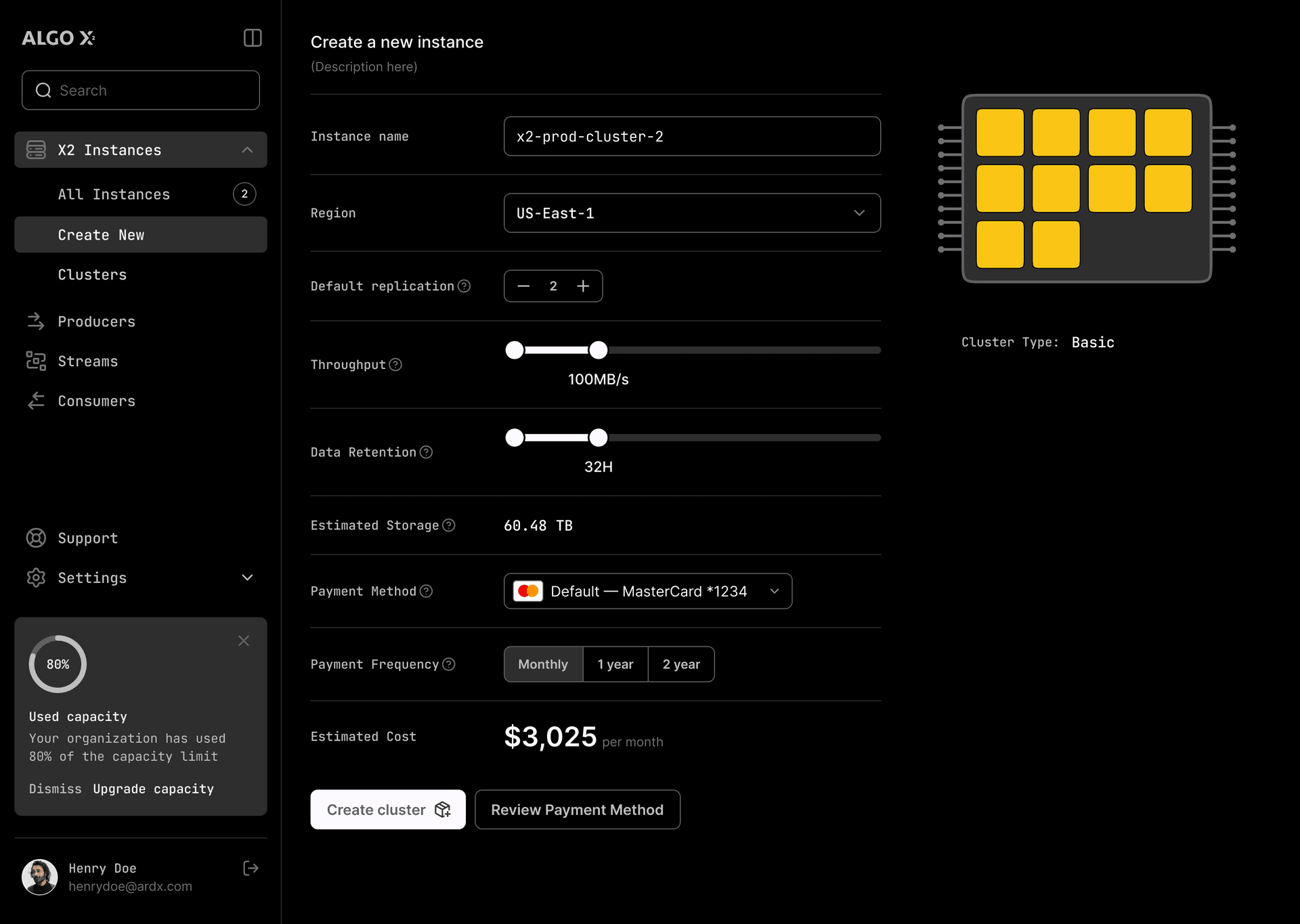

AlgoX2 can serve as the distributed layer for any streaming protocol, but we're starting with Kafka as the logical entry point. We offer full Kafka API compatibility and zero-code integration: teams can redirect their existing data flows to our engine and immediately achieve up to 90% lower costs and up to 10X higher throughput, while keeping the rich Kafka ecosystem of connectors and tools and avoiding vendor lock-in.
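Kafka API compatibility means that, in principle, moving an existing client is a configuration change rather than a code change. The sketch below assumes, as the zero-code claim suggests, that only the standard Kafka client setting `bootstrap.servers` has to change. The helper `redirect_client_config` and all hostnames are hypothetical placeholders, and a real migration may also need different security or authentication settings.

```python
from typing import Dict


def redirect_client_config(config: Dict[str, str], new_bootstrap: str) -> Dict[str, str]:
    """Return a copy of a Kafka client config pointed at a different cluster.

    Hypothetical helper: only bootstrap.servers changes; acks, client.id,
    and every other setting are carried over untouched.
    """
    # Validate that each comma-separated entry looks like host:port.
    for entry in new_bootstrap.split(","):
        host, sep, port = entry.strip().rpartition(":")
        if not sep or not host or not port.isdigit():
            raise ValueError(f"expected host:port, got {entry!r}")
    updated = dict(config)  # copy so the original config is not mutated
    updated["bootstrap.servers"] = new_bootstrap
    return updated


# Existing producer settings (hostnames are placeholders).
producer_config = {
    "bootstrap.servers": "kafka-1.example.internal:9092,kafka-2.example.internal:9092",
    "acks": "all",
    "client.id": "orders-producer",
}

algox2_config = redirect_client_config(producer_config, "algox2.example.internal:9092")
print(algox2_config["bootstrap.servers"])  # algox2.example.internal:9092
```

The returned dict uses the librdkafka-style keys that clients such as confluent-kafka-python accept, so it could be passed to `confluent_kafka.Producer` as-is while the rest of the application stays unchanged.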

Key Differentiators

- Compute–storage separation, policy-driven tiering. Scale CPU and I/O independently; place hot data on NVMe and cold data on object storage without reshuffling.
- Kafka-compatible with inline processing. Keep your existing producers and consumers; add built-in transforms and enrichment for a shorter data path and lower tail latency.
- AI-ready data plane. Compute features in-stream and land the same feed in Iceberg, Delta, or Hudi tables with lineage and replay, keeping training and serving in sync.
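Policy-driven tiering is the most concrete mechanism in this list. As a rough illustration only, a policy might keep recently written log segments on NVMe and move older ones to object storage based on age. Everything here (the `Segment` and `TieringPolicy` types, the age-based rule, the pinned-topics option) is a hypothetical sketch, not AlgoX2's actual policy model. One way to read "without reshuffling" is that a move changes only where a segment is stored, never which topic or partition it belongs to, and the sketch is built around that assumption.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List, Optional, Tuple


class Tier(Enum):
    NVME = "nvme"                  # hot tier: local NVMe
    OBJECT_STORE = "object_store"  # cold tier: e.g. an S3-compatible bucket


@dataclass(frozen=True)
class Segment:
    """Hypothetical log segment; its identity never changes when it moves tiers."""
    topic: str
    partition: int
    base_offset: int
    last_append_ms: int  # timestamp of the newest record in the segment


@dataclass(frozen=True)
class TieringPolicy:
    """Hypothetical age-based policy: recent segments stay hot, older ones go cold."""
    hot_window_ms: int
    pinned_topics: frozenset = frozenset()  # topics that always stay on NVMe

    def tier_for(self, seg: Segment, now_ms: int) -> Tier:
        if seg.topic in self.pinned_topics:
            return Tier.NVME
        age_ms = now_ms - seg.last_append_ms
        return Tier.NVME if age_ms < self.hot_window_ms else Tier.OBJECT_STORE


def plan_moves(
    segments: List[Segment],
    current: Dict[Segment, Tier],
    policy: TieringPolicy,
    now_ms: int,
) -> List[Tuple[Segment, Optional[Tier], Tier]]:
    """List (segment, from_tier, to_tier) for every segment whose tier must change.

    Only the storage location changes; topic, partition, and offsets stay the
    same, so in this model no partition data is reassigned between brokers.
    """
    moves = []
    for seg in segments:
        target = policy.tier_for(seg, now_ms)
        source = current.get(seg)  # None if the segment is not tracked yet
        if source is not target:
            moves.append((seg, source, target))
    return moves


HOUR_MS = 3_600_000
now = 10 * HOUR_MS
policy = TieringPolicy(hot_window_ms=2 * HOUR_MS, pinned_topics=frozenset({"fraud-alerts"}))
fresh = Segment("orders", 0, 1_000, now - 1 * HOUR_MS)
stale = Segment("orders", 0, 0, now - 5 * HOUR_MS)
pinned = Segment("fraud-alerts", 3, 0, now - 8 * HOUR_MS)
current = {fresh: Tier.NVME, stale: Tier.NVME, pinned: Tier.NVME}

moves = plan_moves([fresh, stale, pinned], current, policy, now)
for seg, src, dst in moves:
    label = src.value if src else "untracked"
    print(f"{seg.topic}/{seg.partition}@{seg.base_offset}: {label} -> {dst.value}")
# prints: orders/0@0: nvme -> object_store
```

Keying the decision on segment age keeps the policy cheap to evaluate; a production system would likely weigh access patterns and capacity as well, which this sketch deliberately leaves out.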

What's next

We're working with major enterprises as design partners to bring AlgoX2 into production. With Bessemer's support, we're on a mission to make streaming the default backbone for AI-driven data infrastructure—fast, scalable, and affordable.